At DEF CON 31, security researcher James Kettle (aka “albinowax”) presented a new technique called the “single-packet attack”, which can be used to exploit Web race condition vulnerabilities in a clever and innovative way. The technique relies on HTTP/2 capabilities, so I decided to explore how it works under the hood by looking directly at the HTTP traffic with Wireshark.

A brief history of the HTTP protocol

The first documented version of HTTP (HTTP/1.0) was released in 1996. HTTP is an application layer protocol based on TCP and the first implementation of HTTP was pretty simple: for each resource that the client needs, it creates a new separate TCP connection (also known as “short-lived connections”).

For the early stages of the Web, this was enough because at that time, each website was basically a static HTML page with few resources to load and hyperlinks.

But the Web rapidly evolved and became more complex, so a new version of HTTP (HTTP/1.1) was released as early as 1997. This version has had multiple specification updates, and one of its most important features is the ability to reuse existing TCP connections (also referred to as “persistent connections”). Basically, with the HTTP header Connection: keep-alive (which is the default behavior in HTTP/1.1), the TCP connection is kept open and subsequent requests to the same server can reuse it. This mechanism improves performance by removing the overhead of establishing a new TCP connection for each resource that the client needs to download. However, the HTTP requests on a connection are still serialized: each one has to wait for the previous response.

HTTP/1.1 also introduced HTTP pipelining: with this technique, it is possible to send several HTTP requests on the same connection without waiting for the corresponding HTTP responses (which is what happens with plain persistent connections), hence reducing the impact of network latency.

However this mechanism has several limitations:

- it can be used only for idempotent HTTP methods (like GET, HEAD, PUT, and DELETE) because these methods have no additional side effects if sent twice (for example due to network retransmissions or other issues)

- it’s hard to implement and it’s susceptible to the “head-of-line” problem (responses must be returned in the same order as the requests, so a slow response delays all the ones queued behind it)

- using pipelining with HTTP proxies can create several problems that might be hard to troubleshoot

For these reasons, HTTP pipelining has never been broadly adopted, and most browsers disable it by default because of the high number of bugs and poor server-side implementations.

To overcome the performance bottlenecks of HTTP/1.1, HTTP/2 (derived from an experimental Google protocol called SPDY) was published in 2015. HTTP/2 keeps the semantics of HTTP/1.1 (methods, status codes, header fields), meaning that existing applications can work over HTTP/2 without any change.

HTTP/2 works with streams: a stream is a bidirectional flow of bytes within a single TCP connection. Each stream (identified by a stream ID) can carry multiple frames, which are the smallest unit of information in HTTP/2. There are several types of frames (for example HEADERS and DATA frames), which carry the information in a binary format (rather than in a text format as in HTTP/1.x). One or more frames make up a message, which is a complete HTTP request or HTTP response.
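To make the frame layout more concrete, here is a minimal Python sketch of the 9-byte HTTP/2 frame header (24-bit payload length, 8-bit type, 8-bit flags, one reserved bit plus a 31-bit stream ID). The type codes and flag bits come from the HTTP/2 specification; the function names `pack_frame` and `parse_frame_header` are my own and only serve as illustration.

```python
import struct

# Frame type codes and flag bits as defined by the HTTP/2 specification.
FRAME_TYPES = {0x0: "DATA", 0x1: "HEADERS", 0x4: "SETTINGS",
               0x6: "PING", 0x8: "WINDOW_UPDATE"}
END_STREAM = 0x1   # last frame the sender will emit on this stream
END_HEADERS = 0x4  # the header block is complete


def pack_frame(frame_type: int, flags: int, stream_id: int,
               payload: bytes = b"") -> bytes:
    """Serialize one frame: 9-byte header followed by the payload."""
    length = len(payload)
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit payload length
        frame_type,
        flags,
        stream_id & 0x7FFFFFFF,         # the top bit is reserved
    )
    return header + payload


def parse_frame_header(data: bytes) -> dict:
    """Decode the first 9 bytes of a frame into readable fields."""
    hi, lo, frame_type, flags, stream_id = struct.unpack(">BHBBI", data[:9])
    return {
        "length": (hi << 16) | lo,
        "type": FRAME_TYPES.get(frame_type, hex(frame_type)),
        "stream_id": stream_id & 0x7FFFFFFF,
        "end_stream": bool(flags & END_STREAM),
    }


if __name__ == "__main__":
    frame = pack_frame(0x0, END_STREAM, 3, b"hi")
    print(parse_frame_header(frame))
```

These are the same fields that Wireshark displays when you expand an HTTP/2 frame in a capture.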

Among several improvements (e.g. compression of HTTP headers, server push, etc.), this version introduced the concept of multiplexing: client and server can use a single TCP connection to carry multiple concurrent streams, hence removing the “head-of-line” problem (at least at the application layer) and considerably improving performance.

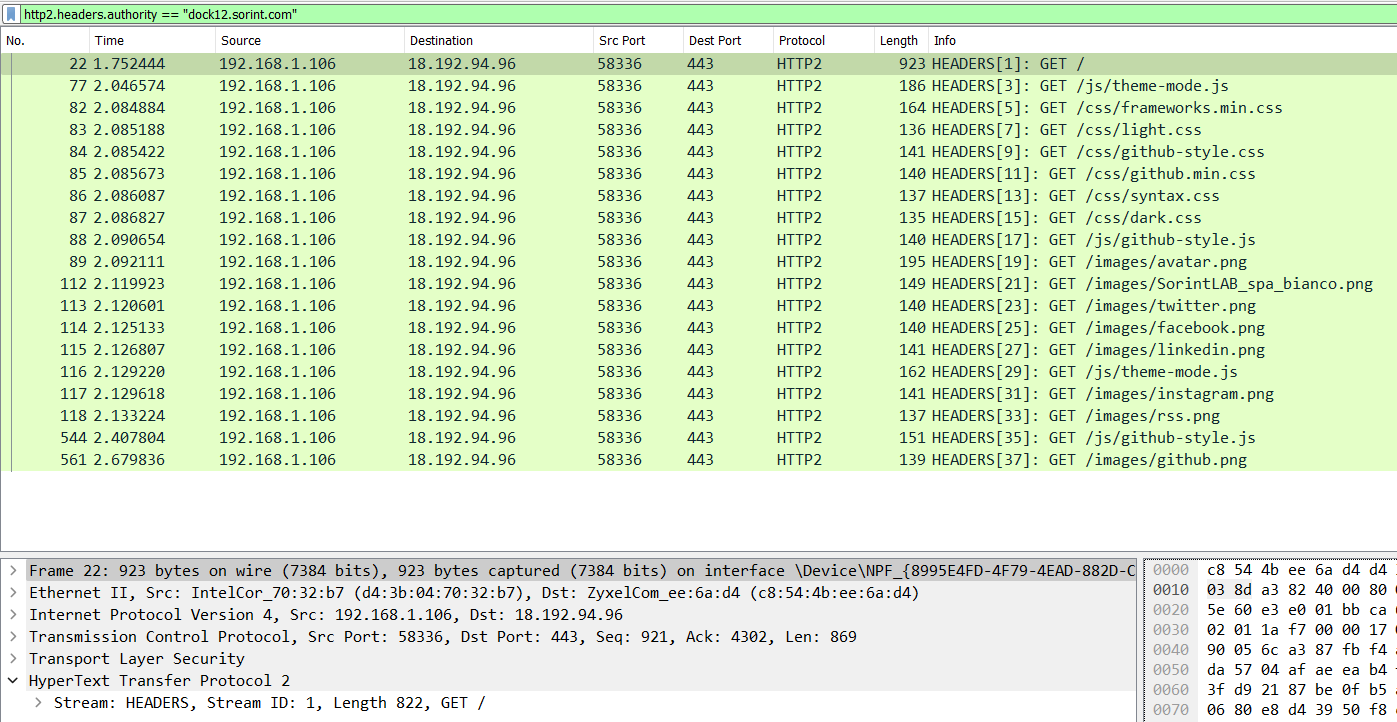

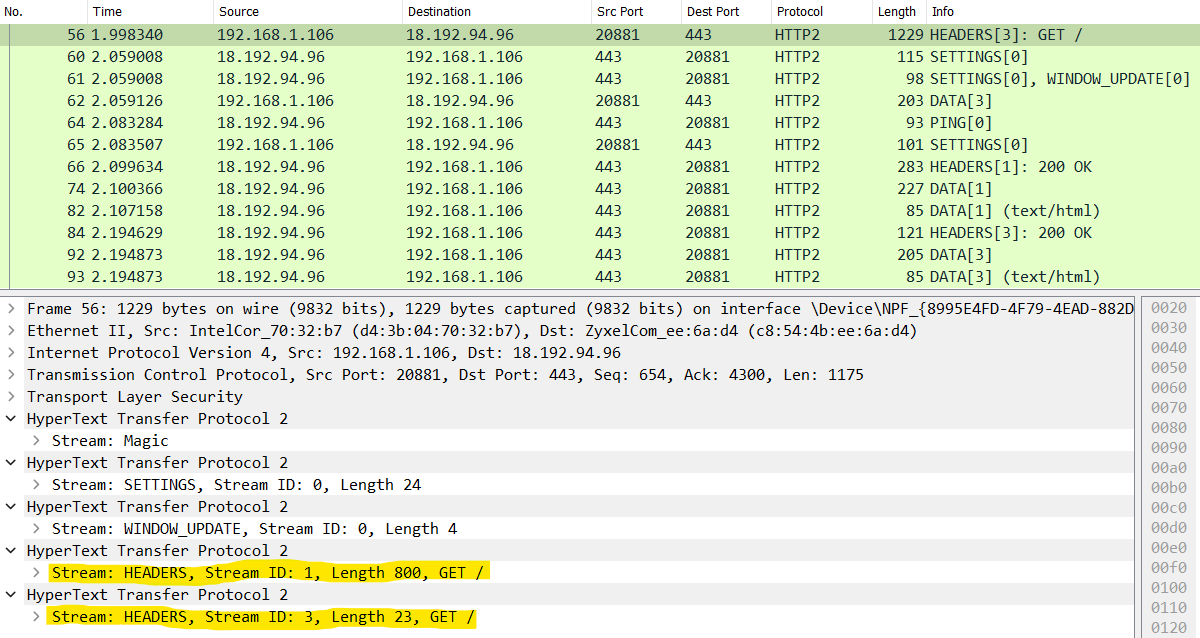

We can see the multiplexing in action in the following Wireshark capture.

We can see that all the resources required to load the page ( https://dock12.sorint.com ) are obtained over just 1 TCP connection (with source port 58336 and destination port 443) thanks to multiplexing. Notice the different stream IDs for each frame (in this case we only have HEADERS frames coming from the client, as the HTTP method is always GET).

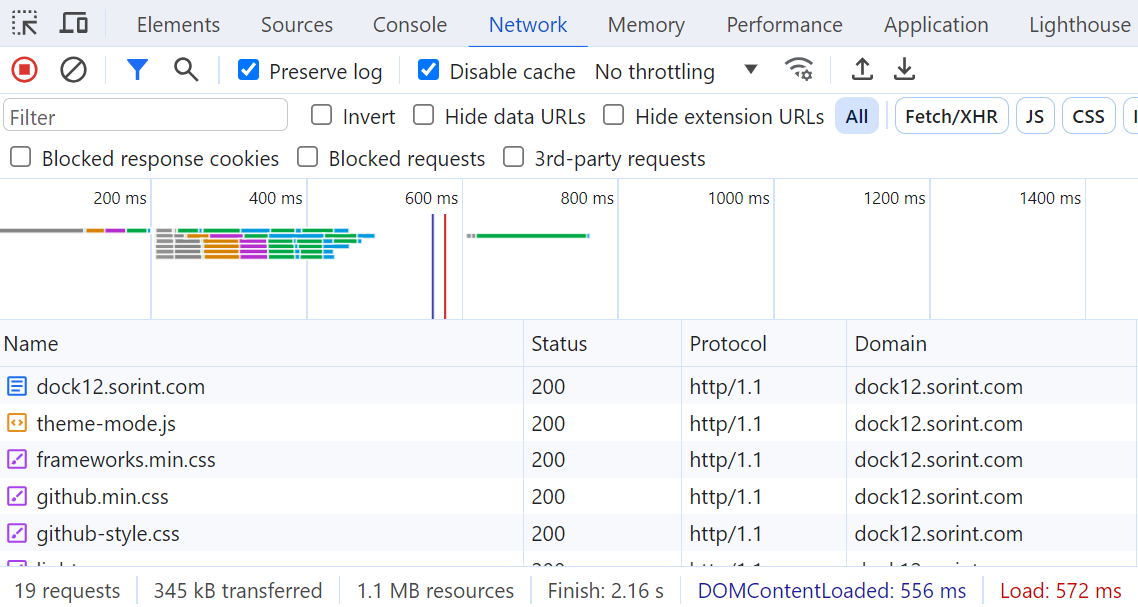

We can also use the developer tool to see the difference between HTTP/1.1 and HTTP/2 when loading the same website.

When using HTTP/1.1 we have several connections from the client to the server (most browsers usually open 6 concurrent connections to a single domain in order to speed up the page loading process):

When using HTTP/2 the browser only establishes 1 TCP connection to get all the resources from the same server. Also notice that the time to load the page is lower (almost halved):

Dissecting the Single-Packet attack with Wireshark

Security researcher James Kettle presented at DEF CON 31 (and also at the Black Hat and Nullcon conferences) a new technique to exploit Web race conditions that were previously hidden (or hard to exploit).

A race condition is a vulnerability class that usually occurs when multiple threads try to access or modify shared data at the same time. So, if we are able to send 2 (or more) requests that interact with the same shared resource within the same short period of time (this is what we call the “race window”), a race condition happens, and this can have a high impact.

The classic example is an online shop that lets you use a free 20% discount coupon: you should be able to use the coupon only once. However, if the application is vulnerable to a race condition, we can craft multiple requests and make them arrive at the server as close together as possible, which has the side effect of applying the coupon multiple times. The same problem enables several other attacks, such as:

- transferring money in excess of your account balance

- rating a product multiple times

- bypassing CAPTCHA limitations

- bypassing anti-bruteforce and rate-limits systems
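The coupon scenario can be reproduced locally with a few threads. The sketch below is only a toy model: the `Shop` class, the `redeem` method and the artificial 50ms pause between the check and the update are all assumptions of mine, used to make the race window wide enough to hit reliably.

```python
import threading
import time


class Shop:
    """Toy model of a shop with a single one-time coupon (hypothetical)."""

    def __init__(self):
        self.coupon_used = False

    def redeem(self, race_window: float = 0.05) -> bool:
        if self.coupon_used:      # 1. check
            return False
        time.sleep(race_window)   # simulated work: this gap is the race window
        self.coupon_used = True   # 2. update
        return True


def redeem_concurrently(n_requests: int) -> int:
    """Fire n_requests at the same time, return how many were accepted."""
    shop = Shop()
    barrier = threading.Barrier(n_requests)
    results = []

    def worker():
        barrier.wait()                 # release all "requests" together
        results.append(shop.redeem())

    threads = [threading.Thread(target=worker) for _ in range(n_requests)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(results)


if __name__ == "__main__":
    print("coupon applied", redeem_concurrently(5), "times")
```

When the calls are made one after the other, only the first one succeeds. When they all enter the check before any of them reaches the update, the coupon is accepted more than once, which is exactly what an attacker tries to achieve over the network.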

Until now it was really hard to exploit this kind of vulnerability, because several factors can negatively affect the attack:

- network jitter: the delay between the time a request is sent and the time it arrives at the target server can change from request to request

- server internal latency: an additional internal delay due to several factors, for example OS process scheduling. Usually this is less relevant than network jitter

However, the researcher released a tool that uses a technique called “single-packet attack” that can exploit web race conditions in a more reliable way. This technique is based on some new capabilities introduced by HTTP/2, but the main idea is based on a previously known technique called “last-byte sync” that applies to HTTP/1.1.

“Last-byte sync” is based on the following considerations: a web server will start processing an HTTP request only when all the client’s data has been received. So, to achieve our goal, which is to let the server process our requests concurrently (to hit the “race window”), we can do the following:

- prepare multiple HTTP requests and for each of them send all the data except the last byte

- send the last byte of each request in parallel
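The two steps above can be sketched in Python with plain sockets. This is a rough illustration, not how Burp Suite implements it: it assumes plain HTTP without TLS, one TCP connection per request, and the names `split_last_byte` and `last_byte_sync` are hypothetical.

```python
import socket
import threading


def split_last_byte(raw_request: bytes) -> tuple[bytes, bytes]:
    """Split a raw HTTP/1.1 request into (everything but the last byte, last byte)."""
    return raw_request[:-1], raw_request[-1:]


def last_byte_sync(host: str, port: int, raw_requests: list[bytes]) -> list[socket.socket]:
    """Pre-send each request minus its last byte, then release the last bytes together.

    Returns the open sockets so that the caller can read the responses
    and close the connections.
    """
    pending = []
    for raw in raw_requests:
        head, tail = split_last_byte(raw)
        sock = socket.create_connection((host, port))
        sock.sendall(head)             # the server now waits for the final byte
        pending.append((sock, tail))

    barrier = threading.Barrier(len(pending))

    def release(sock: socket.socket, tail: bytes) -> None:
        barrier.wait()                 # all threads send at (nearly) the same time
        sock.sendall(tail)

    threads = [threading.Thread(target=release, args=p) for p in pending]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [sock for sock, _ in pending]


if __name__ == "__main__":
    request = b"GET / HTTP/1.1\r\nHost: example.test\r\n\r\n"
    print(split_last_byte(request))
```

For a GET request the withheld byte is the final line feed of the empty line that terminates the headers, so the server cannot consider the request complete until it arrives.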

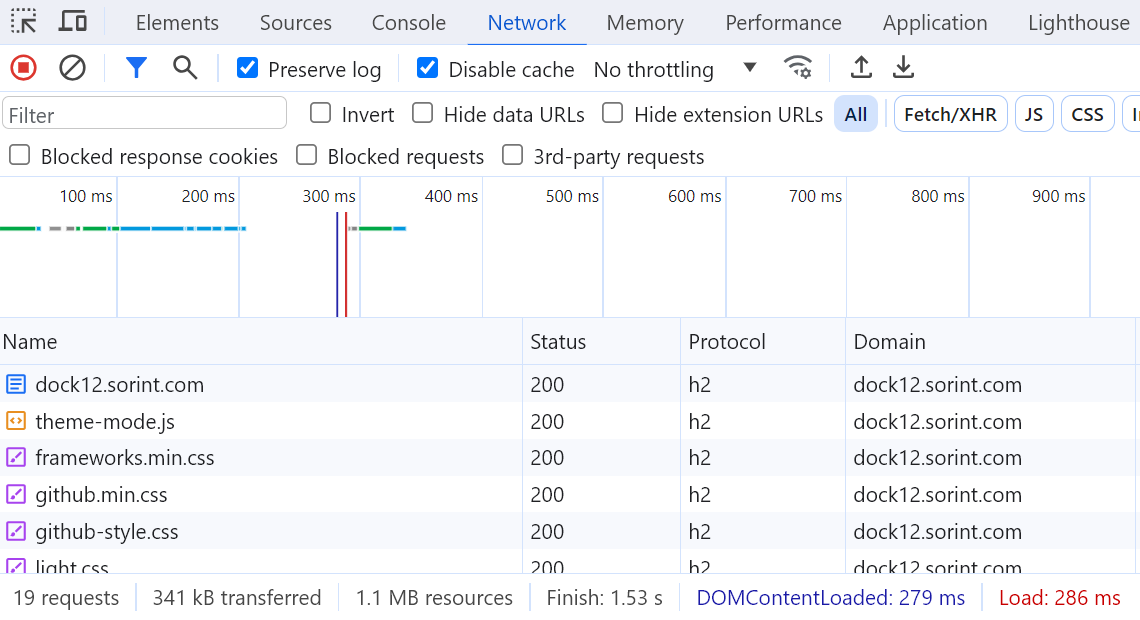

This reduces the effect of network jitter, because the last TCP segment of each HTTP request only needs to carry 1 byte of payload. We can see the “last-byte sync” technique in action in the following Wireshark capture (I’ve used the “Send group in parallel (last-byte sync)” option of Burp Suite Repeater to simulate the attack):

As you can see from the Wireshark capture, the HTTP request (in this case a simple GET) has been split into 2 TCP segments (frame #98 and frame #100), where the second one only carries a 1-byte payload: the last byte of the request, which triggers the server to start processing it.

This was a nice and useful technique (the best one known for testing race conditions in HTTP/1.x). However, the researcher was able to improve on it by relying on some HTTP/2 enhancements.

Thanks to multiplexing, in HTTP/2 it’s actually possible to create a single TCP packet that includes 2 complete HTTP requests (including headers and data). This was already useful for exploiting some “timing attacks” (take a look here if you want to know more), but for Web race conditions we need more than 2 parallel requests (due to server-side jitter). So the security researcher had the great idea of combining these 2 techniques together, resulting in the following algorithm:

- disable the TCP_NODELAY option: to avoid sending many small outgoing packets (which can hurt performance) the TCP stack uses Nagle’s algorithm, and enabling TCP_NODELAY would turn that algorithm off. By keeping the option disabled we let the OS buffer small writes, which gives us control over what is sent to the network and when

- for each request without a body: send the headers and withhold an empty DATA frame

- for each request with a body: send the headers and send the body except the last byte. Withhold a DATA frame with the last byte

- wait 100ms and send a PING frame (this is a trick to ensure that the initial frames have been sent and to ensure that the OS will place all the final frames in a single packet)

- send the last DATA frames of each request
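To see why the final frames fit so easily into one packet, here is a small Python sketch that builds the bytes for body-less requests. It only assembles frames: TLS, the connection preface, SETTINGS and HPACK encoding are left out, the header blocks are assumed to be already HPACK-encoded by the caller, and the function names are hypothetical rather than taken from any tool.

```python
import struct

# Type codes and flag bits as defined by the HTTP/2 specification.
DATA, HEADERS, PING = 0x0, 0x1, 0x6
END_STREAM, END_HEADERS = 0x1, 0x4


def frame(frame_type: int, flags: int, stream_id: int, payload: bytes = b"") -> bytes:
    """Serialize one HTTP/2 frame (9-byte header + payload)."""
    length = len(payload)
    return struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                       frame_type, flags, stream_id & 0x7FFFFFFF) + payload


def build_single_packet_attack(header_blocks: list[bytes]) -> tuple[bytes, bytes]:
    """Return (initial, final) byte strings for requests without a body.

    initial: one HEADERS frame per request, deliberately without END_STREAM
    final:   a PING frame followed by one empty DATA frame per request
    """
    initial = b""
    final = frame(PING, 0, 0, b"\x00" * 8)    # PING carries 8 opaque bytes on stream 0
    for i, block in enumerate(header_blocks):
        stream_id = 2 * i + 1                  # client-initiated streams use odd IDs
        initial += frame(HEADERS, END_HEADERS, stream_id, block)
        final += frame(DATA, END_STREAM, stream_id)  # empty DATA frame completes the request
    return initial, final


if __name__ == "__main__":
    # b"\x82" is the HPACK static-table entry for ":method: GET"; a real request
    # needs a complete header block, this is just a placeholder.
    initial, final = build_single_packet_attack([b"\x82"] * 30)
    print(len(final), "bytes in the final burst")
```

Each empty DATA frame is just a 9-byte header, so the final burst for 30 requests is 17 + 30 × 9 = 287 bytes, well below a typical 1500-byte Ethernet MTU even after the TLS record overhead is added.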

With this technique it is possible to complete about 30 HTTP/2 requests with a single packet, hence removing the network jitter between those requests.

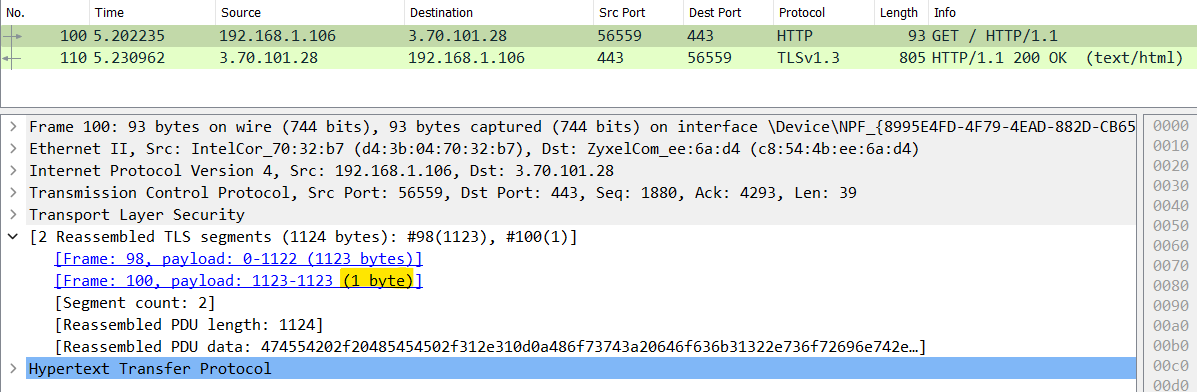

Let’s take a look at the Wireshark capture when performing the “single-packet attack”. For the sake of simplicity, in this example I’ve just sent 2 HTTP requests (2 GET requests to https://dock12.sorint.com):

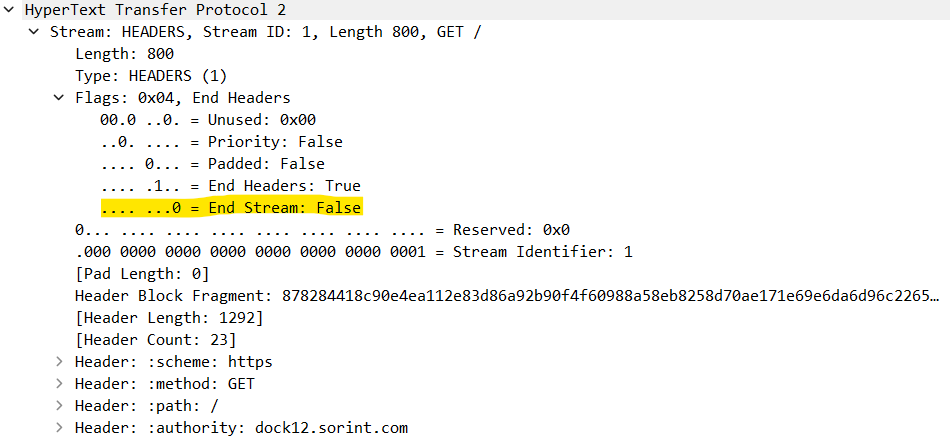

We can notice 2 HEADERS frames (on streams 1 and 3) in a single TCP packet (along with additional control frames such as SETTINGS and WINDOW_UPDATE), one for each GET request. If we expand one of them, we notice that the End Stream flag has not been set even though the GET request doesn’t have a body (this allows us to withhold a “fake” empty DATA frame and makes the server wait for additional data):

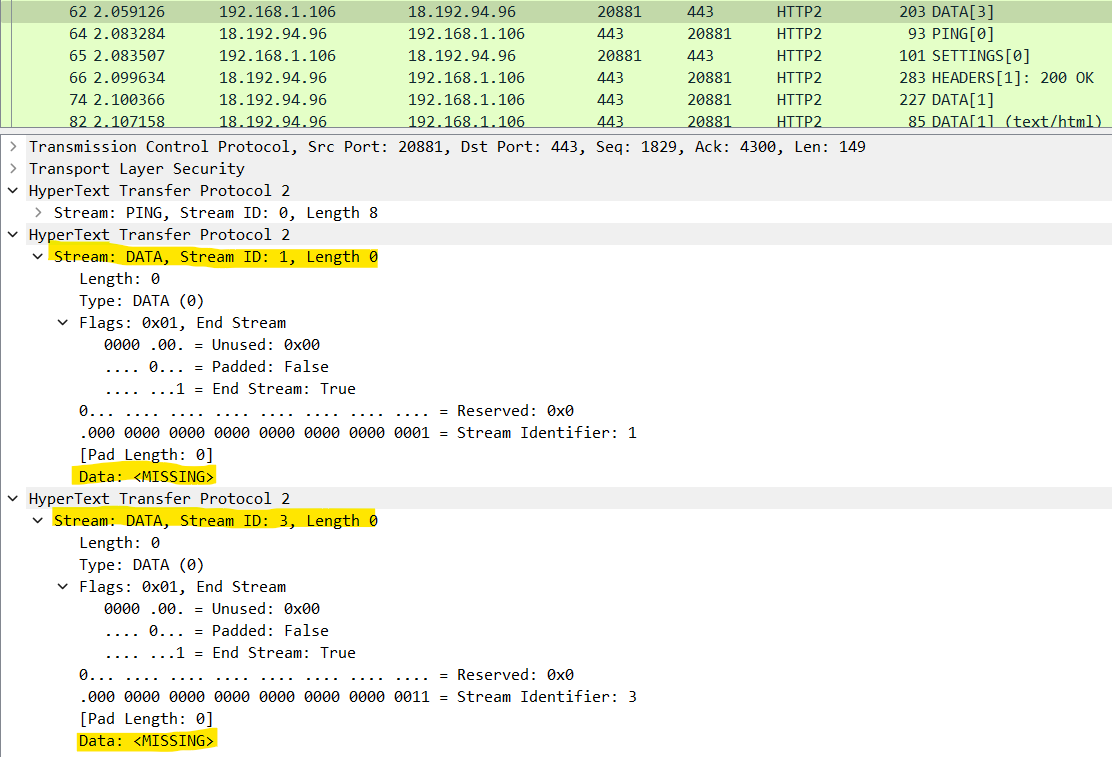

Next, frame number 62 includes the PING frame and the 2 empty DATA frames (one for each GET request), which cause the server to process both GET requests as soon as this single TCP packet arrives:

Using this technique, the researcher was able to send 20 HTTP/2 requests from Melbourne to Dublin (around 17000km away) with a median spread of 1ms, meaning that the requests reach the target server within about a millisecond of each other. This is definitely a game changer for people interested in finding this class of vulnerabilities, which was previously known to be hard to discover and exploit. If you are interested in the implementation details of the technique, you can take a look at Turbo Intruder, a popular Burp Suite extension developed by James Kettle.

Conclusion

In this article I wanted to show how this technique increased the actual exploitability of Web race conditions, which were previously hidden or not exploitable. This gave me the opportunity to show the main differences between the HTTP versions and also to take a closer look at the “single-packet technique” by directly looking at the packet captures to watch the technique in action.